SEO Checklist for Backend Developers

When it comes to search engine optimization (SEO), most people immediately think of frontend strategies—keywords, title tags, meta descriptions, and high-quality content. While these elements are crucial, the backend of a website plays an equally important role in determining how well a site performs in search engine rankings.

In fact, backend developers often contribute to SEO in ways they may not even realize. From the structure of the server responses to the configuration of URLs and server-side rendering, every aspect of the backend architecture influences how search engines crawl, index, and rank a website.

This comprehensive technical SEO checklist is specifically designed for backend developers. It will guide you through essential best practices to enhance a website’s SEO performance, user experience, and overall web health.

Table of Contents

- Why Backend SEO Matters

- Core Technical SEO Checklist for Backend Developers

- API Optimization for SEO

- Caching Strategies for Better Performance

- HTTP Headers and Their SEO Impact

- Error Handling and Redirects

- Schema Markup and Structured Data

- Security Best Practices

- Monitoring and Maintenance

- Final SEO Checklist Template for Backend Developers

Why Backend SEO Matters {#why-backend-seo-matters}

Front-end elements such as keywords, headings, meta descriptions, and internal links get most of the attention in SEO discussions. What often goes unnoticed is the role backend systems play in delivering that content quickly, securely, and accurately to both users and search engine crawlers.

Search engines use crawlers such as Googlebot to fetch and index web pages. These bots analyze the front-end output (HTML, CSS, JavaScript), but how well they can crawl and understand it depends heavily on the backend architecture. A poorly optimized backend creates bottlenecks that slow crawling, can keep pages out of the index, and limit visibility in search results.

How Backend Functionality Impacts SEO

Even if your content is well written and keyword-optimized, backend issues such as slow API responses, misconfigured redirects, or recurring server errors can prevent search engines from crawling and indexing your site properly. The result is lower rankings, a poor user experience, and missed opportunities for organic traffic.

To visualize this, imagine Googlebot crawling a site. Each request it makes passes through backend elements such as servers, databases, HTTP headers, and caching layers.

If any of these interactions are too slow or misconfigured, the bot’s crawl process is disrupted, affecting how your content is evaluated and ranked.

Key SEO Metrics Influenced by Backend Performance

Here’s a breakdown of critical SEO factors directly affected by backend development:

Page Speed

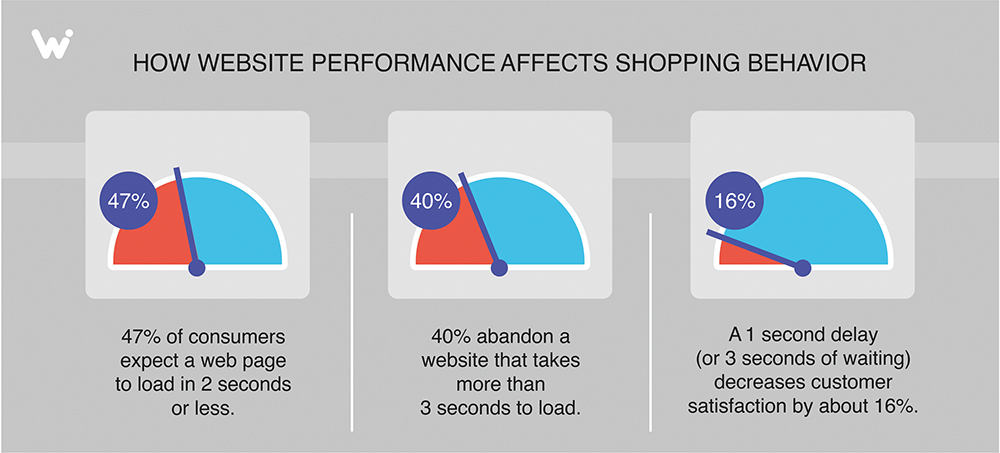

- Backend APIs, server response times, and database queries all influence how fast a page loads.

- Faster load times improve user experience, and page experience signals such as Core Web Vitals are part of Google's ranking systems.

Mobile Usability

- A mobile-friendly backend infrastructure ensures that dynamic content loads properly on various devices.

- Techniques like server-side rendering (SSR) and responsive design affect how Google evaluates mobile performance.

Crawlability

- Search engine bots need a clean, accessible path through your site.

- Improper routing, excessive URL parameters, or broken internal links can block crawlers.

Indexability

- If crawlers can’t fetch or process your pages due to server errors or complex scripts, those pages won’t be indexed.

- Proper HTTP responses (like `200 OK`) and crawlable content are essential.

Redirects

- Misconfigured 301 or 302 redirects can dilute SEO equity.

- Avoid redirect chains and loops that confuse both users and search engines.

HTTP Status Codes

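- Status codes such as 404 (Not Found), 500 (Internal Server Error), and 503 (Service Unavailable) directly affect crawling and indexing. URLs that keep returning 404 are dropped from the index. Persistent 5xx errors make Googlebot slow its crawl rate and can eventually get pages dropped as well.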

- Correct use of 301 redirects, 200 OKs, and proper error handling helps guide crawlers efficiently.

Structured Data Delivery

- Delivering JSON-LD structured data from the backend makes pages eligible for rich results and other SERP (Search Engine Results Page) enhancements, although search engines never guarantee they will be shown.

- This requires coordination between content management and backend rendering.

Core Technical SEO Checklist for Backend Developers {#core-technical-seo-checklist}

A well-optimized backend infrastructure forms the foundation for a high-performing, search-friendly website. Without clean code, efficient server responses, and proper configuration, even the best content can fail to achieve its SEO potential. Backend developers play a crucial role in technical SEO by ensuring that content is accessible, crawlable, and delivered quickly and reliably to both users and search engine bots.

Below is a core checklist of technical SEO practices every backend developer should implement to improve search engine visibility and overall site performance.

1. Implement Server-Side Rendering (SSR)

Why It Matters:

Not all search engines can fully render JavaScript-heavy pages, especially when content is generated on the client side. If key content is only available after JavaScript execution, it may not be indexed properly.

Best Practices:

- Use frameworks that support SSR, such as Next.js (for React) or Nuxt.js (for Vue).

- Ensure critical content, metadata, and schema are rendered on the server before being sent to the browser.

- Consider using hybrid rendering (Static + SSR) for performance and flexibility.
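In practice a framework handles this for you, but the core idea fits in a few lines. The following is a minimal, framework-free Python sketch (standard library only) of what "rendered on the server" means. The title, meta description, and main content are already in the HTML the server returns, so a crawler sees them without executing JavaScript. The `render_product_page` function, the product dictionary shape, and the "Example Store" branding are hypothetical.

```python
from html import escape


def render_product_page(product: dict) -> str:
    """Render a complete HTML document on the server (hypothetical product shape)."""
    title = escape(product["name"])
    description = escape(product["description"])
    # Every SEO-critical element is part of the initial HTML response,
    # so crawlers see it without running any client-side JavaScript.
    return (
        "<!DOCTYPE html>\n"
        '<html lang="en">\n'
        "<head>\n"
        f"  <title>{title} | Example Store</title>\n"
        f'  <meta name="description" content="{description}">\n'
        "</head>\n"
        "<body>\n"
        f"  <h1>{title}</h1>\n"
        f"  <p>{description}</p>\n"
        "</body>\n"
        "</html>\n"
    )


if __name__ == "__main__":
    print(render_product_page(
        {"name": "Nike Air Max", "description": "Lightweight running shoe & everyday sneaker."}
    ))
```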

2. Use Clean, SEO-Friendly URL Structures

Why It Matters:

Well-structured URLs are easier for both users and search engines to read and understand. Descriptive URLs improve click-through rates and help search engines associate keywords with specific pages.

Best Practices:

- Keep URLs short, descriptive, and keyword-rich (e.g., `/product/shoes/nike-air-max`).

- Use hyphens (`-`) to separate words rather than underscores (`_`).

- Avoid unnecessary dynamic parameters like `?id=123&category=shoes` unless absolutely required.

- Use consistent lowercase URLs to avoid duplicate content issues.
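The Python sketch below generates URL slugs and normalizes incoming paths. `slugify` and `canonical_redirect` are illustrative helpers, not part of any framework. The redirect logic assumes your routes are case-insensitive and never use underscores intentionally.

```python
import re
import unicodedata
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def slugify(text: str) -> str:
    """Turn a title like 'Nike Air Max 90' into 'nike-air-max-90'."""
    # Decompose accented characters, then drop anything that is not ASCII.
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Lowercase and collapse every run of non-alphanumeric characters into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


def canonical_redirect(url: str) -> Optional[str]:
    """Return the lowercase, hyphenated URL to redirect to, or None if already clean."""
    parts = urlsplit(url)
    clean_path = parts.path.lower().replace("_", "-")
    if clean_path == parts.path:
        return None
    return urlunsplit((parts.scheme, parts.netloc, clean_path, parts.query, parts.fragment))
```

A request handler would answer a non-`None` result from `canonical_redirect` with a `301` to the returned URL, so only one variant of each page gets indexed.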

3. Configure XML Sitemaps and Robots.txt

Why It Matters:

Sitemaps and robots.txt files help search engine bots understand how to crawl and index your site efficiently. A missing or poorly configured setup can result in missed pages or wasted crawl budget.

Best Practices:

- Dynamically generate XML sitemaps and update them automatically when content changes.

- Ensure the sitemap is accessible at `/sitemap.xml` and linked in your `robots.txt` with a `Sitemap:` directive.

- Use `robots.txt` to guide bots away from unnecessary pages such as admin panels, cart and checkout flows, or duplicate filter URLs (e.g., `/cart`, `/checkout`, `/filter?color=blue`).

- Avoid blocking resources (CSS/JS) that are needed for proper page rendering.
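Both files can be generated on the fly from your content store. This Python sketch uses only `xml.etree.ElementTree`. The `build_sitemap` and `build_robots_txt` helpers and the example URLs are hypothetical. A real site would pull the page list from its database and serve the output at `/sitemap.xml` and `/robots.txt`.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes "&" in query strings
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


def build_robots_txt(sitemap_url: str, disallow: list[str]) -> str:
    """Build a robots.txt that blocks the given paths and advertises the sitemap."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"
```

The sitemap protocol caps each file at 50,000 URLs, so large sites need to split their URLs across several sitemaps tied together by a sitemap index file.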

4. Optimize for Mobile Performance

Why It Matters:

Google uses mobile-first indexing, which means it primarily evaluates the mobile version of your website. A backend that fails to serve mobile-optimized content or APIs can negatively impact rankings.

Best Practices:

- Set the correct viewport meta tag: `<meta name="viewport" content="width=device-width, initial-scale=1">`.

- Serve content that adapts based on the user’s device or screen size.

- Ensure that backend APIs can deliver tailored data for mobile interfaces (e.g., smaller images, mobile-specific UI content).

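- Validate mobile rendering with Lighthouse in Chrome DevTools or the URL Inspection tool in Google Search Console. Google retired its standalone Mobile-Friendly Test in December 2023.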

API Optimization for SEO {#api-optimization-for-seo}

Search engines don't rank your API endpoints directly, but the performance and structure of your APIs have a significant indirect impact on SEO. APIs power dynamic content, influence page load speed, affect interactivity, and support the delivery of structured data. Search engines weigh all of these when they judge page quality and decide rankings.

For backend developers, optimizing API performance is crucial for enhancing technical SEO and ensuring a seamless user experience across devices and platforms. Below is a breakdown of the best practices for API optimization with SEO in mind.

1. Prioritize API Speed and Response Efficiency

Why It Matters:

Fast APIs let the frontend render content sooner, which improves loading metrics such as Largest Contentful Paint (LCP), one of Google's Core Web Vitals. Sluggish APIs delay rendering and frustrate users, which tends to raise bounce rates and lower engagement.

Best Practices:

- Minimize payload sizes by removing unused fields and unnecessary data from API responses.

- Enable HTTP compression using Gzip or Brotli to reduce the size of transmitted data.

- Flatten deeply nested JSON structures, as complex data can increase parsing time and memory usage on the client side.

- Monitor API latency using tools like Postman, New Relic, or Datadog.
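The Python sketch below combines payload trimming and compression. The `LISTING_FIELDS` whitelist and the `encode_listing` helper are hypothetical. It uses gzip because gzip ships with the standard library, whereas Brotli needs a third-party package. In many deployments this compression happens at the reverse proxy or CDN rather than in application code.

```python
import gzip
import json

# Hypothetical whitelist: the only fields a product listing page actually renders.
LISTING_FIELDS = ("id", "name", "price", "url")


def encode_listing(products: list[dict], accept_encoding: str) -> tuple[bytes, dict]:
    """Return (body, headers) with unused fields stripped and gzip applied if accepted."""
    trimmed = [{k: p[k] for k in LISTING_FIELDS if k in p} for p in products]
    # Compact separators drop the optional whitespace from the JSON output.
    body = json.dumps(trimmed, separators=(",", ":")).encode("utf-8")
    headers = {"Content-Type": "application/json", "Vary": "Accept-Encoding"}
    # Naive check; a production server should also honor q-values in Accept-Encoding.
    if "gzip" in accept_encoding.lower():
        body = gzip.compress(body)
        headers["Content-Encoding"] = "gzip"
    return body, headers
```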

2. Implement Efficient API Caching

Why It Matters:

Caching reduces the number of server requests and speeds up data delivery, which is crucial for both frontend rendering and search engine crawlers that evaluate site performance.

Best Practices:

- Use in-memory caching tools like Redis or Memcached to store frequently requested API responses.

- Implement HTTP caching with proper `Cache-Control` headers to control browser and intermediary caching behavior.

- For static or rarely changing data, use long-lived caching directives (e.g., `Cache-Control: max-age=86400`, which allows reuse for 86,400 seconds, or one day).
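Below is a minimal cache-aside sketch in Python. `TTLCache` is a hypothetical in-process stand-in for Redis or Memcached, so the example runs without a server. With redis-py, the same pattern is a `get` followed by `set(key, value, ex=ttl)` on a miss. The injectable `clock` parameter exists only to make expiry easy to test.

```python
import time
from typing import Any, Callable


class TTLCache:
    """In-process stand-in for Redis/Memcached: entries expire after `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: dict[str, tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_set(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # fresh cached value, no backend work needed
        value = loader()  # miss or expired entry: recompute and store
        self._store[key] = (now + self.ttl, value)
        return value


def cache_headers(max_age: int) -> dict:
    """HTTP caching header for a response that may be reused for `max_age` seconds."""
    return {"Cache-Control": f"public, max-age={max_age}"}
```

Use `public` only for responses that are identical for every user. Personalized API responses should be sent with `private` so shared caches do not store them.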

3. Reduce Unnecessary API Calls

Why It Matters:

Frequent or redundant API requests can increase server load, delay rendering, and make your pages appear sluggish to users and bots alike.

Best Practices:

- Batch API requests where possible to reduce the number of round-trips.

- Use lazy loading for non-essential data (e.g., loading user reviews only after the user scrolls to that section).

- Leverage GraphQL to request only the specific data fields required for a page, minimizing over-fetching.
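Batching is easiest to see on the server side of a batch endpoint, for example a hypothetical `GET /api/products?ids=1,3`. The frontend makes one request instead of one per product, and the backend resolves all IDs with a single query. The sketch uses Python's built-in `sqlite3` with an illustrative `products` table. The function name and schema are assumptions.

```python
import sqlite3


def fetch_products_batch(conn: sqlite3.Connection, ids: list[int]) -> dict[int, dict]:
    """Resolve many product IDs with one query instead of one request per ID."""
    if not ids:
        return {}
    # One "?" per ID keeps the query parameterized (no string-built SQL values).
    placeholders = ",".join("?" for _ in ids)
    rows = conn.execute(
        f"SELECT id, name, price FROM products WHERE id IN ({placeholders})", ids
    ).fetchall()
    return {row[0]: {"id": row[0], "name": row[1], "price": row[2]} for row in rows}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products VALUES (?, ?, ?)",
        [(1, "Air Max", 129.0), (2, "Pegasus", 119.0), (3, "Vomero", 159.0)],
    )
    # What a hypothetical GET /api/products?ids=1,3 handler would return.
    print(fetch_products_batch(conn, [1, 3]))
```

Split very large ID lists into chunks, because databases limit the number of bound parameters in a single statement.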

4. Implement Robust API Error Handling

Why It Matters:

Poorly handled API errors can lead to broken page components, rendering issues, and reduced crawlability—all of which impact your SEO performance.

Best Practices:

- Return accurate HTTP status codes (e.g., `200 OK`, `404 Not Found`, `500 Internal Server Error`) that reflect the outcome of each API request.

- Avoid exposing stack traces or sensitive error details in production environments, as they may reveal vulnerabilities or confuse crawlers.

- Include clear and user-friendly error messages for failed data loads to maintain a consistent UX.
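One way to keep status codes accurate while hiding internals is to route every handler through a small wrapper. In this Python sketch, `handle` and `NotFoundError` are hypothetical names. The full traceback goes to the server log, and the client receives only a generic message with the matching status code.

```python
import json
import logging

logger = logging.getLogger("api")


class NotFoundError(Exception):
    """Raised by handlers when the requested resource does not exist (hypothetical)."""


def handle(handler, *args) -> tuple[int, dict, str]:
    """Run a handler and translate its outcome into (status, headers, JSON body)."""
    headers = {"Content-Type": "application/json"}
    try:
        return 200, headers, json.dumps(handler(*args))
    except NotFoundError as exc:
        return 404, headers, json.dumps({"error": str(exc) or "Not found"})
    except Exception:
        # Full traceback goes to the server log only; the client gets a generic message.
        logger.exception("Unhandled error in %s", getattr(handler, "__name__", handler))
        return 500, headers, json.dumps({"error": "Something went wrong. Please try again later."})
```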

Caching Strategies for Better Performance {#caching-strategies}

Effective caching is one of the most powerful ways to improve website performance, reduce server load, and enhance the user experience. It plays a vital role in technical SEO, as faster-loading pages tend to see better engagement, lower bounce rates, and stronger search performance. Below are the key caching strategies you should implement:

1. Browser Caching

Browser caching allows frequently accessed files (like CSS, JavaScript, and images) to be stored locally in a user’s browser. This significantly reduces the number of HTTP requests and speeds up page load times for returning visitors.

- Set Cache-Control Headers: Configure your server to send appropriate `Cache-Control` or `Expires` headers for static assets. For example, you can set long expiration dates for files that don’t change often.

- Use Cache-Busting Techniques: When updating assets, append a version number or hash to the file name (e.g., `/main.v1.2.3.js`). This forces the browser to fetch the new version without affecting other cached files.

SEO Tip: Faster repeat visits increase user satisfaction and reduce bounce rates, signaling positive user experience to search engines.
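A content hash is a common alternative to hand-maintained version numbers, because the file name changes only when the file's bytes change. The Python sketch below shows the idea. `fingerprinted_name` is a hypothetical helper, and bundlers such as webpack or Vite can do this for you when configured to.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprinted_name(path: str, content: bytes, length: int = 8) -> str:
    """Insert a content hash into a file name: /static/main.js -> /static/main.<hash>.js."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))
```

Because a fingerprinted URL always serves the same bytes, it can safely be cached with a very long `max-age`. Any change to the file produces a new name.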

2. Server-Side Caching

Server-side caching reduces the need to regenerate pages or content for each request, resulting in lower server load and faster response times.

- Page Caching: Store full HTML pages that don’t change frequently. This is ideal for blog posts, product pages, or static landing pages.

- Object Caching: Cache database query results or objects in memory using tools like Memcached or Redis.

- Use Reverse Proxies: Implement caching proxies like Varnish, NGINX, or Apache Traffic Server to handle caching outside your application server. These tools can serve cached content at lightning speed.

Performance Insight: Reducing backend processing improves Time to First Byte (TTFB), a key performance metric for SEO.

3. Content Delivery Network (CDN) Caching

CDNs distribute your content across multiple servers worldwide, allowing users to download resources from the location closest to them.

- Serve Static Assets via CDN: Offload images, scripts, stylesheets, and videos to a CDN provider like Cloudflare, Akamai, or Amazon CloudFront.

- Enable Edge Caching: Let the CDN cache full pages at the edge level, minimizing calls to your origin server and speeding up load times globally.

SEO Advantage: Serving content quickly to international audiences improves user experience and search visibility across different regions.
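Browser and CDN caching are usually configured together through the `Cache-Control` header. `max-age` applies to browsers, while `s-maxage` applies only to shared caches such as CDNs. Below is a Python sketch of a per-path policy. The path prefixes and lifetimes are illustrative assumptions. Most CDNs honor `s-maxage`, but check your provider's documentation.

```python
def cache_control_for(path: str) -> str:
    """Pick a Cache-Control policy per resource type (illustrative values; tune per site)."""
    static_suffixes = (".css", ".js", ".png", ".jpg", ".webp", ".svg", ".woff2")
    if path.startswith("/static/") and path.endswith(static_suffixes):
        # Fingerprinted assets never change at the same URL: cache for a year.
        return "public, max-age=31536000, immutable"
    if path.startswith(("/account", "/cart", "/checkout")):
        # Personalized pages must not be stored by shared caches such as CDNs.
        return "private, no-store"
    # HTML pages: browsers revalidate every time, the CDN edge may reuse for 5 minutes.
    return "public, max-age=0, s-maxage=300"
```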

HTTP Headers and Their SEO Impact {#http-headers-seo}

HTTP headers are an essential part of every web request and response. While often overlooked, they play a significant role in search engine optimization (SEO), web performance, and site security. Configuring the right headers helps search engines index your site correctly, protects against vulnerabilities, and ensures efficient content delivery.

1. SEO-Related HTTP Headers

These headers directly influence how search engines interpret, crawl, and index your website:

- X-Robots-Tag: This header gives granular control over how search engines index specific resources like PDFs, images, or other non-HTML files. For example, `X-Robots-Tag: noindex, nofollow` keeps a file out of search results and tells crawlers not to follow its links.

- Link: rel="canonical": Sent as `Link: <https://example.com/page>; rel="canonical"`, this header points search engines to the preferred version of a URL. That consolidates duplicate content signals instead of splitting them across several URLs. It's especially useful for non-HTML content such as PDFs, where an HTML `<link>` tag isn't available.

- Content-Type: This header tells browsers and bots the format of the returned resource (e.g., `text/html`, `application/json`, `image/png`). A correct `Content-Type` ensures proper rendering and parsing, preventing search engines from misinterpreting the content.

SEO Insight: Search engines rely on correct header configurations for proper crawling and indexing. Misconfigured headers can lead to indexing errors or duplicate content issues.
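For a non-HTML file such as a PDF, both indexing signals have to travel as HTTP headers. The Python sketch below builds them. `seo_headers_for_file` and the example URL are hypothetical. The `Link` value follows the RFC 8288 syntax, with the target URL in angle brackets.

```python
from typing import Optional


def seo_headers_for_file(content_type: str, canonical_url: Optional[str], indexable: bool) -> dict:
    """Build SEO-relevant response headers for a non-HTML file such as a PDF."""
    headers = {"Content-Type": content_type}
    if not indexable:
        # A PDF cannot carry a robots meta tag, so the directive goes in a header.
        headers["X-Robots-Tag"] = "noindex, nofollow"
    if canonical_url:
        # RFC 8288 Link header: target URL in angle brackets, then the relation.
        headers["Link"] = f'<{canonical_url}>; rel="canonical"'
    return headers
```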

2. Security-Enhancing HTTP Headers

Security headers protect your site and its users, indirectly supporting SEO by building trust and preventing attacks that could damage rankings or user experience.

- Content-Security-Policy (CSP): Helps prevent XSS (Cross-Site Scripting) attacks by controlling which resources (scripts, styles, images) the browser is allowed to load.

- Strict-Transport-Security (HSTS): Forces browsers to use HTTPS instead of HTTP for all future requests, enhancing both security and performance.

- X-Frame-Options: Prevents your website from being embedded in an iframe on another domain, which helps block clickjacking attacks.

SEO Tip: A secure site builds user trust. HTTPS, which HSTS helps enforce, is also a confirmed Google ranking signal.
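These headers can be attached to every response in one place, for example in middleware. The values in this Python sketch are illustrative starting points, not recommendations for any particular site. A real CSP must list the origins your pages actually load from. `add_security_headers` is a hypothetical helper. It skips HSTS on plain HTTP because browsers ignore the header when it arrives over an insecure connection.

```python
# Illustrative values only; tighten the CSP to the origins your pages really use.
SECURITY_HEADERS = {
    "Content-Security-Policy": "default-src 'self'; img-src 'self' https:; frame-ancestors 'none'",
    "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
    "X-Frame-Options": "DENY",
}


def add_security_headers(headers: dict, is_https: bool) -> dict:
    """Return a copy of `headers` with security headers added, never overwriting existing ones."""
    merged = dict(headers)
    for name, value in SECURITY_HEADERS.items():
        if name == "Strict-Transport-Security" and not is_https:
            continue  # browsers ignore HSTS received over plain HTTP
        merged.setdefault(name, value)
    return merged
```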

3. Performance-Optimizing HTTP Headers

Performance headers manage how content is cached and validated, improving load speeds and server efficiency—both crucial for SEO.

- ETag (Entity Tag): Provides a unique identifier for a specific version of a resource. When the resource hasn’t changed, the server can respond with a `304 Not Modified` status, saving bandwidth.

- Last-Modified: Indicates the last time a resource was changed. Browsers and proxies can use this to determine whether to fetch the file again or use a cached version.

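SEO Benefit: Validation headers like `ETag` and `Last-Modified` cut transfer sizes on repeat visits. They also let crawlers that send conditional requests, including Googlebot, skip re-downloading unchanged pages, which saves both crawl budget and server resources.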
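Conditional requests can be sketched in Python with the standard library alone. `conditional_response` is a hypothetical helper. It derives the `ETag` from a hash of the body and answers `304 Not Modified` when the client's copy is still current. Following HTTP semantics, it checks `If-None-Match` first and falls back to `If-Modified-Since` only when no `If-None-Match` header was sent.

```python
import hashlib
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def conditional_response(body: bytes, last_modified: datetime, request_headers: dict) -> tuple[int, dict, bytes]:
    """Return 304 Not Modified when the client's cached copy is still current.

    `last_modified` must be a timezone-aware datetime.
    """
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    headers = {
        "ETag": etag,
        "Last-Modified": format_datetime(last_modified.astimezone(timezone.utc), usegmt=True),
    }
    if_none_match = request_headers.get("If-None-Match")
    if if_none_match is not None:
        # If-None-Match takes precedence; If-Modified-Since is ignored when both are sent.
        tags = [tag.strip() for tag in if_none_match.split(",")]
        if etag in tags or "*" in tags:
            return 304, headers, b""
    elif "If-Modified-Since" in request_headers:
        try:
            since = parsedate_to_datetime(request_headers["If-Modified-Since"])
        except (TypeError, ValueError):
            since = None  # unparseable date: ignore the header
        if since is not None:
            if since.tzinfo is None:
                since = since.replace(tzinfo=timezone.utc)
            # HTTP dates have one-second resolution, so drop microseconds before comparing.
            if last_modified.replace(microsecond=0) <= since:
                return 304, headers, b""
    return 200, headers, body
```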

Error Handling and Redirects {#error-handling-redirects}

Proper error handling and an efficient redirect strategy are critical to maintaining search engine rankings and delivering a seamless user experience. Incorrect or mismanaged HTTP status codes and redirects can negatively impact crawlability, indexation, and user engagement—all key components of technical SEO.

1. Understanding HTTP Status Codes and SEO Impact

HTTP status codes inform browsers and search engine crawlers about the outcome of a request. Using the correct status codes helps search engines understand how to treat your content.

- 200 OK: This status means the request was successful and the page is available. Ensure that indexable content returns `200 OK` so it can be properly crawled and ranked.

- 301 Moved Permanently: A 301 redirect tells search engines that a URL has permanently moved to a new location. It passes most of the original page’s link equity (ranking power), making it the preferred redirect method for SEO.

- 404 Not Found: This indicates that the requested page doesn’t exist. While a `404` is valid for missing pages, make sure it’s used intentionally and accompanied by a user-friendly custom page.

- 500 Internal Server Error: This signals a server-side issue. Frequent `500` errors can harm your crawl budget, degrade user trust, and lead to lost rankings. These should be fixed immediately.

SEO Tip: Search engines expect consistent, accurate status codes. Misusing them (e.g., returning 200 for an error page) can confuse crawlers and affect indexing.
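The mapping from outcome to status code can be expressed as a tiny routing function. In this Python sketch, `PAGES`, `REDIRECTS`, and `resolve` are hypothetical stand-ins for a real router and content store. The missing-page branch still returns a friendly page, but with a genuine `404` status. Returning `200` with error content would create the "soft 404" that confuses crawlers.

```python
from http import HTTPStatus

# Hypothetical content store and permanent-redirect map.
PAGES = {"/": "<h1>Home</h1>", "/blog/backend-seo": "<h1>Backend SEO</h1>"}
REDIRECTS = {"/blog/old-seo-post": "/blog/backend-seo"}
NOT_FOUND_HTML = '<h1>Page not found</h1><p>Try the <a href="/">homepage</a> or search below.</p>'


def resolve(path: str) -> tuple[int, dict, str]:
    """Map a request path to an accurate status code, headers, and body."""
    if path in PAGES:
        return HTTPStatus.OK, {"Content-Type": "text/html; charset=utf-8"}, PAGES[path]
    if path in REDIRECTS:
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": REDIRECTS[path]}, ""
    # Friendly body, but still a real 404 status (not a "soft 404").
    return HTTPStatus.NOT_FOUND, {"Content-Type": "text/html; charset=utf-8"}, NOT_FOUND_HTML
```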

2. Custom 404 Pages That Support SEO

Instead of showing a default browser error, a custom 404 page can turn a dead end into an opportunity to re-engage users.

- Include Helpful Navigation: Offer links to popular pages, recent posts, or a sitemap to guide users back on track.

- Add a Search Box: Let users search your site directly from the 404 page to quickly find what they were looking for.

- Maintain Brand Voice and Design: A branded 404 page improves user experience and reduces bounce rates, which are behavioral signals considered by search engines.

User Experience Insight: A well-designed 404 page helps retain users, keeping them on your site longer and reducing negative SEO signals.

3. Smart Redirect Strategy for SEO

Redirects are often necessary, especially during site migrations, URL structure changes, or content consolidation. However, how you implement them makes a big difference in SEO outcomes.

- Avoid Redirect Chains and Loops: A redirect chain occurs when URL A redirects to B, which then redirects to C. This adds load time, confuses crawlers, and may dilute link equity. Always redirect directly from A to C.

- Use Canonical URLs: If multiple URLs serve the same content, set the preferred version using the `rel="canonical"` tag. This avoids duplicate content issues and consolidates ranking signals.

- Choose 301 Over 302: A `302 Found` is a temporary redirect, so search engines may keep indexing the original URL instead of consolidating signals on the new one. Use `301 Moved Permanently` when the redirect is permanent.

Search Engine Guidance: Clean, direct redirects help Google and Bing index your content correctly, preserving your site’s authority and rankings.
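Redirect chains often pile up in a redirect table over several migrations. The Python sketch below collapses such a table so every legacy URL reaches its final destination in a single `301` hop, and it raises an error on loops. `flatten_redirects` is a hypothetical helper you might run at deploy time.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL straight at its final target (A->B->C becomes A->C)."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until the target is no longer itself redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"Redirect loop involving {source!r}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened
```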

Schema Markup and Structured Data {#schema-markup-structured-data}

Schema markup—also known as structured data—is a type of code that helps search engines better understand the content on your web pages. Implementing structured data correctly can improve your visibility in search results through enhanced listings, known as rich snippets. These visual enhancements can include ratings, images, FAQs, breadcrumbs, and more, ultimately increasing your click-through rate (CTR) and overall search performance.

1. JSON-LD Integration for SEO

JSON-LD (JavaScript Object Notation for Linked Data) is the format Google recommends for structured data. It lets you add structured data to your pages in a clean, non-intrusive way, usually within the `<head>` or just before the closing `</body>` tag.

- Inject Structured Data via Backend: Your CMS or server-side rendering logic should dynamically insert the JSON-LD markup during page generation. This ensures search engines see the markup in the initial crawl.

- Use Relevant Schema Types: Choose schema types that align with your content. Common examples include:
  - `Article` – for blog posts and news content
  - `Product` – for eCommerce product listings
  - `FAQPage` – for FAQ sections (Google now shows FAQ rich results mainly for well-known, authoritative government and health sites)
  - `Organization` – for business and brand identity
  - `BreadcrumbList` – for enhanced navigation in search listings

SEO Tip: Implementing schema markup can help your pages qualify for rich results, improving visibility and organic traffic.
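Server-side injection of JSON-LD takes only a few lines of Python. `json_ld_script` is a hypothetical helper, and the product data is illustrative. Escaping `<` as `\u003c` stops a value that contains `</script>` from closing the tag early, and JSON parsers still decode the escape back to `<`.

```python
import json


def json_ld_script(data: dict) -> str:
    """Serialize structured data into a <script type="application/ld+json"> tag."""
    payload = json.dumps(data, ensure_ascii=False)
    # Escape "<" so a value containing "</script>" cannot terminate the tag early.
    payload = payload.replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'


product_schema = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Nike Air Max",
    "offers": {"@type": "Offer", "price": "129.00", "priceCurrency": "USD"},
}
# Rendered into the page head during server-side generation.
head_html = f"<head>\n  <title>Nike Air Max</title>\n  {json_ld_script(product_schema)}\n</head>"
```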

2. Dynamic Schema Generation

For websites with frequently changing or database-driven content (e.g., blogs, eCommerce stores, news portals), structured data should be generated dynamically to ensure accuracy and scalability.

- Auto-Generate Schema from Data: Pull key information (like product name, price, stock status, or review ratings) directly from your database to create accurate and up-to-date schema for each page.

- Adapt to Content Type: For example, a blog post might use `Article` schema with properties like `author`, `datePublished`, and `headline`, while a product page could use `Product` and `Offer` schemas.

- Use Conditional Logic: Generate different schema structures based on the type or category of content, ensuring relevance and avoiding overuse of generic schema types.

Scalability Insight: Automating schema creation reduces manual work and keeps your structured data consistent across large websites.
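Conditional, data-driven schema generation might look like the following Python sketch. The record fields (`type`, `title`, `price`, `stock`, and so on) are hypothetical database columns. The schema.org types and properties (`Article`, `Product`, `Offer`, `datePublished`, `availability`) are real vocabulary. Returning an empty dictionary for unmatched content types avoids falling back to a generic schema.

```python
from datetime import date


def build_schema(record: dict) -> dict:
    """Choose a schema.org type based on a (hypothetical) database record's content type."""
    kind = record["type"]
    if kind == "post":
        return {
            "@context": "https://schema.org",
            "@type": "Article",
            "headline": record["title"],
            "author": {"@type": "Person", "name": record["author"]},
            "datePublished": record["published"].isoformat(),
        }
    if kind == "product":
        in_stock = record["stock"] > 0
        return {
            "@context": "https://schema.org",
            "@type": "Product",
            "name": record["title"],
            "offers": {
                "@type": "Offer",
                "price": f"{record['price']:.2f}",
                "priceCurrency": record["currency"],
                "availability": "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock",
            },
        }
    # No schema for content types without a good match.
    return {}
```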

3. Validation and Testing Tools

After implementation, it’s critical to ensure that your schema is correctly formatted and eligible for rich results.

- Use Google’s Rich Results Test: Test any page to confirm whether it’s eligible for enhanced display in Google Search.

- Validate with Schema.org Tools: Double-check the syntax and structure of your JSON-LD against official Schema.org definitions to ensure full compliance.

- Monitor via Google Search Console: Track performance and detect errors in the “Enhancements” section of Search Console for types like FAQs, products, events, etc.

Technical SEO Note: Valid, error-free structured data improves your site’s compatibility with search engines and supports better semantic understanding of your content.

Security Best Practices {#security-best-practices}

A secure website is not just critical for protecting user data—it’s also a ranking factor in Google’s algorithm. Search engines prioritize websites that demonstrate strong security practices because they provide a safer and more trustworthy experience for users. Implementing the following website security best practices will not only protect your data but also enhance your SEO and user trust.

1. HTTPS Everywhere – SSL as a Ranking Signal

Migrating your website to HTTPS is a must in modern web development. HTTPS secures the communication between the user’s browser and your server through SSL/TLS encryption.

- Install an SSL Certificate: Obtain an SSL certificate from a trusted Certificate Authority (CA). This ensures that sensitive information such as login credentials, credit card details, and personal data are transmitted securely.

- Force HTTPS with 301 Redirects: After enabling HTTPS, redirect all HTTP traffic to HTTPS using 301 permanent redirects. This ensures that search engines index only the secure versions of your pages and that link equity is preserved.

- Update Internal Links and Canonicals: Ensure all internal links and canonical tags point to HTTPS URLs to avoid mixed content issues or duplicate indexing.

SEO Insight: Google explicitly stated that HTTPS is a ranking factor. Additionally, browsers mark HTTP sites as “Not Secure,” which can hurt trust and increase bounce rates.
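The HTTP-to-HTTPS redirect is usually configured in the web server or load balancer, but the logic is simple enough to sketch in Python. `https_redirect` is a hypothetical helper that assumes HTTPS is served on the default port. Behind a proxy or load balancer, the original scheme usually arrives in a header such as `X-Forwarded-Proto` rather than in the URL the application sees.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def https_redirect(url: str) -> Optional[tuple[int, dict]]:
    """Return (301, headers) pointing at the HTTPS URL, or None if already secure."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return None
    # Assumes HTTPS runs on the default port 443, so any explicit port is dropped.
    host = parts.hostname or ""
    secure = urlunsplit(("https", host, parts.path or "/", parts.query, ""))
    return 301, {"Location": secure}
```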

2. Secure Cookies – Protect Session Data

Cookies are commonly used for managing user sessions, but if not secured properly, they can expose users to risks like session hijacking.

- Use the `Secure` Flag: This ensures cookies are only sent over HTTPS connections, protecting them from being intercepted.

- Use the `HttpOnly` Flag: Prevents client-side scripts (like JavaScript) from reading cookies, so a cross-site scripting (XSS) attack cannot easily steal session cookies.

- Consider the `SameSite` Attribute: Setting `SameSite=Strict` or `SameSite=Lax` limits cookie transmission in cross-site requests, helping mitigate CSRF (Cross-Site Request Forgery) attacks.

User Trust Boost: Securing cookies ensures that session data and login states remain protected, which is especially important for eCommerce and membership sites.
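Python's standard `http.cookies` module can emit all three attributes. `samesite` support requires Python 3.8 or later. The cookie name `session_id`, the one-hour lifetime, and the `session_cookie_header` helper are illustrative assumptions.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id: str) -> str:
    """Build a Set-Cookie value carrying the Secure, HttpOnly, and SameSite attributes."""
    cookie = SimpleCookie()
    cookie["session_id"] = session_id
    morsel = cookie["session_id"]
    morsel["path"] = "/"
    morsel["secure"] = True     # only sent over HTTPS
    morsel["httponly"] = True   # not readable from JavaScript
    morsel["samesite"] = "Lax"  # withheld from most cross-site requests
    morsel["max-age"] = 3600    # expire after one hour (illustrative)
    return morsel.OutputString()
```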

3. Bot Protection – Keep Out the Bad Without Blocking the Good

While Googlebot and other legitimate crawlers are essential for SEO, harmful bots can slow down your site, consume server resources, and even scrape or spam your content.

- Use CAPTCHA Systems: Implement reCAPTCHA v3 or similar tools to verify human users during form submissions or login attempts without disrupting the user experience.

- Implement Rate-Limiting and Firewall Rules: Use Web Application Firewalls (WAF) to block abusive traffic patterns, and throttle access for suspicious IP addresses.

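- Robots.txt and Bot Detection: Ensure your `robots.txt` file allows access to valuable crawlers like Googlebot. Keep in mind that `robots.txt` is advisory. Reputable crawlers honor it, but malicious bots usually ignore it, so you have to block them at the server, WAF, or CDN level. Advanced solutions can also identify bots by behavior rather than relying on user-agent strings alone.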

Technical SEO Tip: Be careful not to unintentionally block search engine crawlers when applying bot protection—this can prevent your pages from being indexed.
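Rate limiting is often implemented with a token bucket. Each client gets a budget that refills at a steady rate and allows short bursts. The Python sketch below keeps its state in memory for a single process. `TokenBucket` and its parameters are hypothetical, and a multi-server deployment would keep the counters in a shared store such as Redis. Rejected requests would typically receive `429 Too Many Requests`.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second per client, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self._buckets: dict[str, tuple[float, float]] = {}  # client -> (tokens, last seen)

    def allow(self, client_ip: str) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(client_ip, (float(self.capacity), now))
        # Refill in proportion to elapsed time, never beyond capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self._buckets[client_ip] = (tokens - 1, now)
            return True
        self._buckets[client_ip] = (tokens, now)
        return False
```

Before exempting a client that claims to be Googlebot, verify it with a reverse DNS lookup followed by a forward lookup, as Google documents. User-agent strings alone are trivial to spoof.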

Monitoring and Maintenance {#monitoring-and-maintenance}

SEO is not a one-time task—it requires ongoing monitoring, auditing, and proactive maintenance. Keeping your website optimized means constantly analyzing performance data, identifying technical issues early, and ensuring your content remains crawlable, indexable, and error-free. A well-monitored site not only avoids ranking drops but also improves user experience and site reliability over time.

1. Log Monitoring – Gain Visibility Into Search Engine Behavior

Server log analysis gives you a behind-the-scenes view of how search engines interact with your site. By reviewing server logs, you can identify crawl patterns, detect anomalies, and optimize your site structure for better search performance.

- Track Crawl Activity: Monitor which pages are being crawled most often by search engine bots like Googlebot. This helps ensure your most important content is being prioritized.

- Identify Frequent Errors: Look for recurring `404 Not Found` and `500 Internal Server Error` responses. These errors can hurt SEO by wasting crawl budget and providing a poor user experience.

- Spot Unwanted Bots: Logs can help uncover malicious or spammy bots that might be slowing down your site or consuming resources unnecessarily.

SEO Tip: Regular log reviews can help optimize your crawl budget, especially for large websites with thousands of pages.
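A first pass at log analysis needs nothing more than a regular expression and a counter. This Python sketch assumes the common Apache/NGINX "combined" log format. `crawl_report` is a hypothetical helper. Matching "Googlebot" in the user-agent only approximates real Googlebot traffic, because the string can be spoofed.

```python
import re
from collections import Counter

# Apache/NGINX "combined" log format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawl_report(lines):
    """Count Googlebot hits per path and 404/500 responses per (status, path)."""
    crawled, errors = Counter(), Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m:
            continue  # skip malformed lines
        if "Googlebot" in m["agent"]:
            crawled[m["path"]] += 1
        if m["status"] in ("404", "500"):
            errors[(m["status"], m["path"])] += 1
    return crawled, errors
```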

2. SEO Tools – Track Health and Uncover Optimization Opportunities

Using trusted SEO tools is essential for keeping your website in top shape. These tools help uncover hidden issues, track performance metrics, and find opportunities to improve rankings.

- Google Search Console: Provides detailed insights into indexing status, crawl errors, Core Web Vitals, and search queries. It also alerts you to structured data issues and manual actions.

- Screaming Frog SEO Spider: A powerful desktop crawler that simulates how search engines crawl your website. It highlights broken links, redirect chains, duplicate content, and missing metadata.

- Ahrefs & SEMrush: Comprehensive SEO platforms that perform full site audits, track keyword rankings, monitor backlinks, and detect content gaps.

Technical SEO Insight: Regular audits help you stay ahead of issues that could affect crawlability, speed, or indexation.

3. Automated SEO Testing – Catch Problems Before They Go Live

Integrating automated testing into your development workflow ensures that technical SEO issues are detected before your content reaches users or search engines.

- Header & Redirect Validation: Test HTTP status codes, redirect behavior, and canonical tags automatically during builds or deployments.

- Structured Data Validation: Use tools like Google’s Rich Results Test or Schema Markup Validator in your CI/CD pipeline to ensure all schema remains valid and up to date.

- Pre-Deployment SEO Checks: Incorporate SEO checks into your Continuous Integration/Continuous Deployment (CI/CD) process to prevent misconfigurations like missing meta tags, broken links, or blocked resources from reaching production.

Efficiency Boost: Catching SEO errors early in the development cycle saves time, protects rankings, and ensures a seamless user experience.
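A pre-deployment check can be as simple as parsing each key page and listing what is wrong with it. The Python sketch below uses only the standard library. `seo_problems` and the specific checks it performs are illustrative assumptions. It expects each JSON-LD block to be a single JSON object, and it complements rather than replaces tools like the Rich Results Test. In CI, you might fetch a list of important URLs from a staging deployment and fail the build when any page returns a non-empty list.

```python
import json
from html.parser import HTMLParser


class _SEOParser(HTMLParser):
    """Collect the canonical link, meta description, and JSON-LD blocks from HTML."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.description = None
        self.json_ld = []
        self._in_json_ld = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "link" and a.get("rel") == "canonical":
            self.canonical = a.get("href")
        elif tag == "meta" and a.get("name") == "description":
            self.description = a.get("content")
        elif tag == "script" and a.get("type") == "application/ld+json":
            self._in_json_ld = True
            self.json_ld.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_json_ld = False

    def handle_data(self, data):
        if self._in_json_ld:
            self.json_ld[-1] += data


def seo_problems(status: int, html: str) -> list[str]:
    """Return human-readable problems; an empty list means the page passes."""
    problems = []
    if status != 200:
        problems.append(f"expected status 200, got {status}")
    parser = _SEOParser()
    parser.feed(html)
    if not parser.canonical:
        problems.append("missing canonical link")
    if not parser.description:
        problems.append("missing meta description")
    for block in parser.json_ld:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            problems.append("invalid JSON-LD")
            continue
        if not isinstance(data, dict) or "@context" not in data or "@type" not in data:
            problems.append("JSON-LD missing @context or @type")
    return problems
```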

Final SEO Checklist Template for Backend Developers {#final-seo-checklist-template}

| Checklist Item | Done (✓) |
|---|---|
| Clean, readable URLs | |
| Proper use of HTTP status codes | |
| Dynamic XML sitemap generation | |
| Correct robots.txt configuration | |
| API response times < 200 ms | |
| GZIP/Brotli compression enabled | |
| Caching headers for static content | |
| Edge caching via CDN | |
| Proper redirect strategy (no chains) | |
| Custom 404 pages | |
| Canonical tags in headers | |
| JSON-LD structured data | |
| HTTPS enforced sitewide | |
| Secure headers (HSTS, CSP, etc.) | |
| Logs monitored for crawl issues | |
| Regular SEO audits using tools | |